This topic is extremely important for GATE. Also, most of us find it confusing. In this series, we will try to cover the basic essentials required to solve GATE questions (trust me, not much depth in this topic is needed for GATE!). The topics will include the following:-

- Race Condition and The Critical Section Problem

- Solutions to the 2-process Synchronization Problem

- Hardware solutions including the Test, Set and Lock (TSL)

- Semaphores, Mutexes and the difference between them

- Applications of Semaphores

- A couple of classic synchronization problems

- Very important previous GATE questions

Race Condition

Most of us are familiar with the notion of a

process. A process can be thought of as a

program in execution. In a uni-processing environment, only 1 process can be running at a time. Suppose there are 10 processes to be executed on a certain computer. Process 1 starts executing and in the midst of its execution, it requires some I/O time. So,

during this time, if we keep the CPU idle, it will be a wastage and we will obtain reduced throughput. What we can do is, some other process can execute on CPU in the mean time. In this way, the CPU is always kept busy (that is the aim).

But this constant getting-in and throwing-out of processes from the CPU comes with a price.

When there are so many processes executing with an effective idea of togetherness, consistency can be hard to achieve, especially when there are shared variables involved. Also, depending on order of execution of processes, the results obtained may be different. We will examine all these ideas.

Let us consider the famous

Producer-Consumer problem to understand what a race condition is. While we do not need to analyze the exact code for this problem, we must understand intuitively what the problem is. In the Producer-Consumer problem, there are

2 processes, a

Producer process and a

Consumer process. Also, we have an

array/buffer of items, let us consider the array to be infinite for now.

To maintain the number of items available in the buffer/array, a

shared variable count is needed. It is a shared variable because both the processes should be able to modify it. When the Producer process produces an item and places it on the buffer, it should increment by 1 (execute the statement

count++). When the Consumer process takes up an item from the buffer, it should decrement count by 1 (execute the statement

count--).

But count++ and count-- are not atomic statements at the Assembly Language Level. In other words, these statements are individually executed as a series of 3 atomic statements shown below:-

Let initial value of count be 18. We have numbered the statements from 1 to 6 for ease of analysis. Note that atomic statements can be individually in any order, which means that after execution of statement 1, the Producer process may be pre-empted while the Consumer process executes statement 4. So, (1,2,3,4,5,6) is a possible execution sequence. Also, (1,4,2,5,3,6) is another possible execution sequence.

When the

sequence (1,2,3,4,5,6) occurs, the following happens:-

- reg1 = 18

- reg1 = 18+1 = 19

- count = 19

- reg2 = 19

- reg2 = 19-1 =18

- count = 18 (final value of count is 18-->correct)

So we find that the desired result is obtained. Now, let us see what happens when the sequence (1,2,4,5,3,6) occurs:-

- reg1 = 18

- reg2 = 18

- reg1 = 18+1 = 19

- reg2 = 18-1 = 17

- count = reg1 = 19

- count = reg2 = 17 (final value of count is 17--> incorrect)

If we interchange the final 2 statements in the above sequence so that (1,2,4,5,6,3) occurs, the following happens:-

- reg1 = 18

- reg2 = 18

- reg1 = 18+1 = 19

- reg2 = 18-1 = 17

- count = reg2 = 17

- count = reg1 = 19 (final value of count is 17--> incorrect)

So, in both the above cases, the final value of count is inconsistent and undesired. Depending on which process executes last, the final value of count is changing. This is called a race condition.

Race condition is a situation where several processes access and manipulate shared data concurrently. The final value of the shared data depends on the process which finishes last. Concurrent processes must be synchronized to prevent race conditions.

The Critical Section Problem

Suppose there are n processes, each having a particular section of code where it accesses shared variables. So, each process has a code segment where it accesses shared data which is called the critical section. We must ensure that when 1 process is executing in its critical section, no other process must be allowed to execute in its critical section.

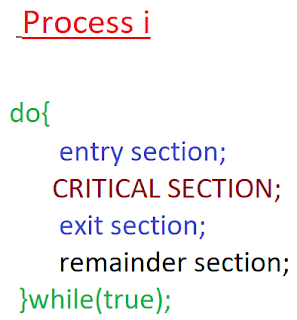

The general code structure of process i can be shown by the diagram below:-

The entry section is usually used to obtain locks on shared variables and permission to access the Critical Section.

The exit section is usually used to release locks on shared variables so that other processes can access their critical sections.

The remainder section is a code segment not related to the critical section.

The code is shown in a do-while loop with the assumption that a process will end when it executes some code in its remainder section which causes it to terminate.

Critical Section Problem Solution Requirements

Any solution to a critical section problem must satisfy the following 2 conditions mandatorily:-

- Mutual Exclusion: When a process i is executing in its critical section, no other process should be allowed to enter its critical section.

- Progress: If no process is in its critical section and we have some process which is interested to enter its critical section, only those processes not in their remainder section should be allowed to participate in the decision of which process will get the chance to enter the critical section next. In other words, only those processes which are interested to enter the critical section should be able to influence which process gets the chance to enter the critical section. A non-interested process should not be able to block an interested process from entering the critical section.

**Note: If there is INFINITE WAITING TIME TO MAKE ABOVE DECISION (which also includes the notion of DEADLOCK) to make above decision, that means there is NO PROGRESS. This particular point is very important for GATE.

A solution to the critical section problem should ideally satisfy another condition known as Bounded Waiting the definition of which is not the same across textbooks. We can think of bounded waiting as the assurance implicit to the process that there will be a bound (finite upper limit even if it is a very high number) on the number of times other processes are allowed to enter the critical section after that process has requested to enter the critical section and before that request is granted.

In this post, we have seen up to the

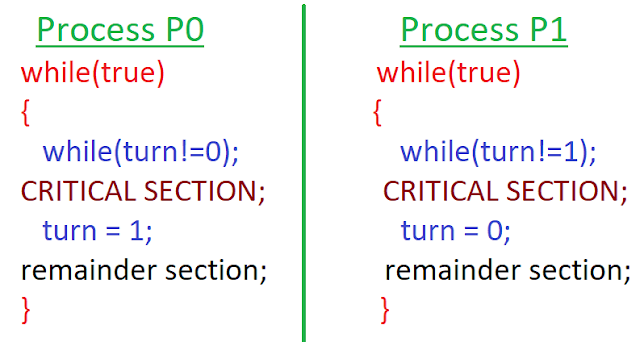

Critical Section Problem. In the next post, we will see various solutions to the

2-process Synchronization problem where we have only 2 processes.

Click here to read Part 2

Was it a nice read? Please subscribe to the blog by clicking on the 'Follow' button on the right hand side of this page. You can also subscribe by email by entering your email id in the box provided. In that case, you will be updated via email as and when new posts are published.Also, please like the Facebook Page for this blog at Gate Computer Science Ideas. Cheers!